
Implementing MRI

Introduction

The objective of this tutorial is to extend basic L3 forwarding with a scaled-down version of In-Band Network Telemetry (INT), which we call Multi-Hop Route Inspection (MRI).

MRI allows users to track the path and the length of queues that every packet travels through. To support this functionality, you will need to write a P4 program that appends an ID and queue length to the header stack of every packet. At the destination, the sequence of switch IDs corresponds to the path, and each ID is followed by the queue length of the port at that switch.
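To make the mechanism concrete, here is a minimal Python model of how the trace builds up hop by hop. It is not the P4 you will write. The names Packet and record_hop are hypothetical, and the sketch assumes each switch places its record at the front of the stack, which matches the ordering of the sample output in Step 3.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Packet:
    """Toy model of a packet carrying an MRI trace (hypothetical, for illustration)."""
    payload: str
    count: int = 0  # number of (swid, qdepth) records that follow
    swtraces: List[Tuple[int, int]] = field(default_factory=list)


def record_hop(pkt: Packet, swid: int, qdepth: int) -> None:
    """What one switch does at egress: add its record and bump the count."""
    pkt.swtraces.insert(0, (swid, qdepth))  # assumption: newest record goes first
    pkt.count += 1


pkt = Packet("P4 is cool")
record_hop(pkt, swid=1, qdepth=17)  # s1: congested link towards s2
record_hop(pkt, swid=2, qdepth=0)   # s2: idle link towards h2

# Records are stored newest-first, so reverse them to recover the path.
path = [swid for swid, _ in reversed(pkt.swtraces)]
print(pkt.count, pkt.swtraces, path)
```

The queue depths 17 and 0 are taken from the sample output in Step 3; in a real run they depend on the traffic at that moment.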

As before, we have already defined the control plane rules, so you only need to implement the data plane logic of your P4 program.

Spoiler alert: There is a reference solution in the solution sub-directory. Feel free to compare your implementation to the reference.

Step 1: Run the (incomplete) starter code

The directory with this README also contains a skeleton P4 program, mri.p4, which initially implements L3 forwarding. Your job (in the next step) will be to extend it to properly prepend the MRI custom headers.

Before that, let's compile the incomplete mri.p4 and bring up a switch in Mininet to test its behavior.

-

In your shell, run:

makeThis will:

- compile

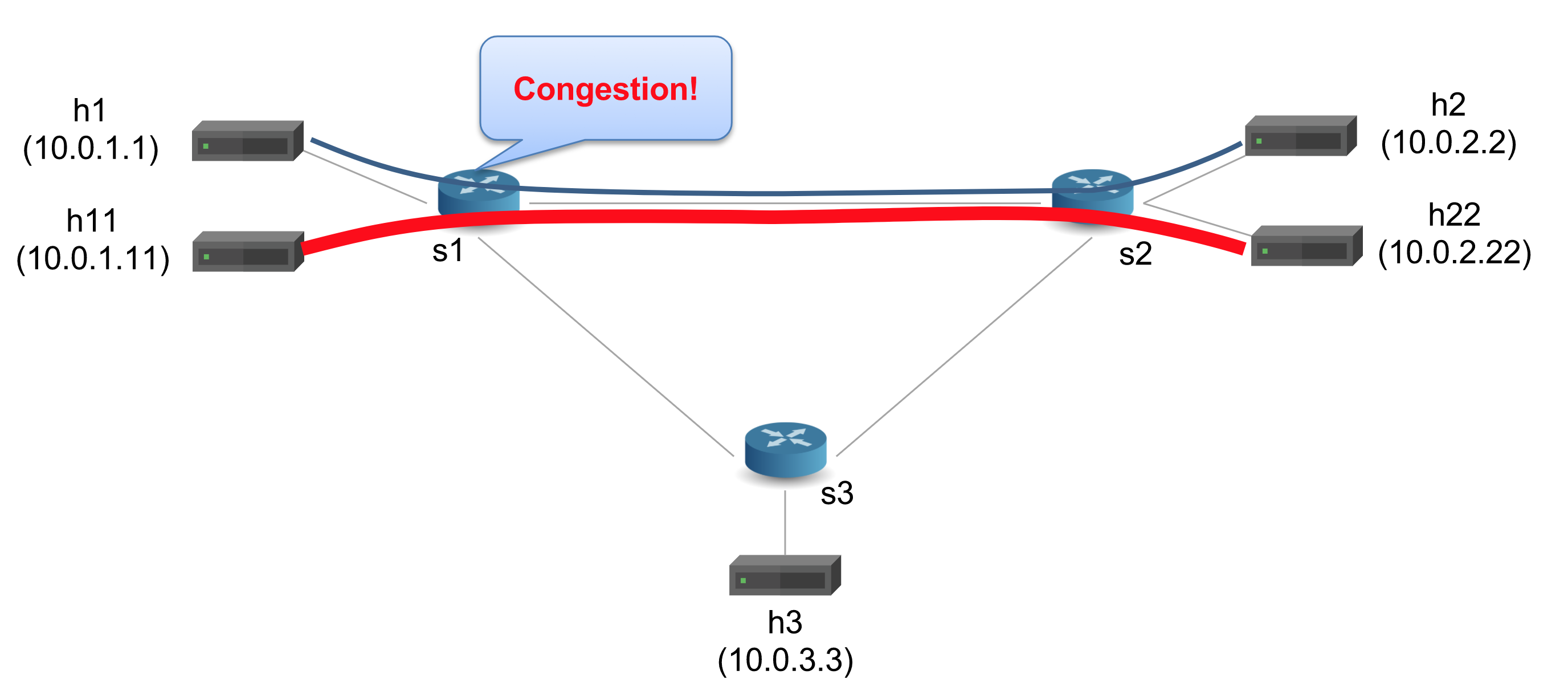

mri.p4, and - start a Mininet instance with three switches (

s1,s2,s3) configured in a triangle. There are 5 hosts.h1andh11are connected tos1.h2andh22are connected tos2andh3is connected tos3. - The hosts are assigned IPs of

10.0.1.1,10.0.2.2, etc (10.0.<Switchid>.<hostID>). - The control plane programs the P4 tables in each switch based on

sx-runtime.json

- compile

- We want to send low-rate traffic from h1 to h2 and high-rate iperf traffic from h11 to h22. The link between s1 and s2 is common to both flows and is a bottleneck because we reduced its bandwidth to 512kbps in topology.json. Therefore, if we capture packets at h2, we should see a high queue size for that link.

- You should now see a Mininet command prompt. Open four terminals for h1, h11, h2, h22, respectively:

  mininet> xterm h1 h11 h2 h22

- In h2's xterm, start the server that captures packets:

  ./receive.py

- In h22's xterm, start the iperf UDP server:

  iperf -s -u

- In h1's xterm, send one packet per second to h2 using send.py, say for 30 seconds:

  ./send.py 10.0.2.2 "P4 is cool" 30

  The message "P4 is cool" should be received in h2's xterm.

- In h11's xterm, start the iperf client sending for 15 seconds:

  iperf -c 10.0.2.22 -t 15 -u

- At h2, the MRI header has no hop info (count=0).

- Type exit to close each xterm window.
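A rough back-of-the-envelope check shows why the queue on the s1-s2 link should build up once your implementation records it. The sketch assumes the iperf client sends UDP at iperf's default rate of 1 Mbit/s (no -b flag is given above) and ignores the one-packet-per-second flow from h1. The function name is hypothetical.

```python
def backlog_growth_bytes_per_s(offered_bps: float, link_bps: float) -> float:
    """Rate at which the queue grows when offered load exceeds link capacity."""
    return max(0.0, offered_bps - link_bps) / 8


LINK_BPS = 512_000     # bottleneck bandwidth from the text (512 kbps)
IPERF_BPS = 1_000_000  # assumption: iperf's default UDP rate of 1 Mbit/s

growth = backlog_growth_bytes_per_s(IPERF_BPS, LINK_BPS)
print(f"queue grows by about {growth:.0f} bytes per second until it is full")
```

If the offered load were below the link rate, the growth would be zero and the recorded queue depth would stay at or near 0.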

You should see the message received at host h2, but without any information about the path the message took. Your job is to extend the code in mri.p4 to implement the MRI logic to record the path.

A note about the control plane

P4 programs define a packet-processing pipeline, but the rules governing packet processing are inserted into the pipeline by the control plane. When a rule matches a packet, its action is invoked with parameters supplied by the control plane as part of the rule.

In this exercise, the control plane logic has already been implemented. As part of bringing up the Mininet instance, the make script will install packet-processing rules in the tables of each switch. These are defined in the sX-runtime.json files, where X corresponds to the switch number.
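The relationship between rules and actions can be modelled in a few lines of Python. This is only an illustrative sketch: the rule contents (prefixes, MAC addresses, ports) are made up and are not copied from the sX-runtime.json files, lookup is a hypothetical helper standing in for a longest-prefix-match table, and drop is assumed to be the default action.

```python
import ipaddress

# Rules as a control plane might install them: match key, action name, parameters.
# All values are hypothetical.
RULES = [
    ("10.0.1.1/32", "ipv4_forward", {"dstAddr": "08:00:00:00:01:01", "port": 2}),
    ("10.0.2.0/24", "ipv4_forward", {"dstAddr": "08:00:00:00:02:00", "port": 3}),
]


def lookup(dst_ip: str):
    """Return (action, params) of the longest matching prefix, or drop if none match."""
    addr = ipaddress.ip_address(dst_ip)
    best = None
    for prefix, action, params in RULES:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0]):
            best = (net.prefixlen, action, params)
    # Assumption: packets that match no rule are dropped.
    return (best[1], best[2]) if best else ("drop", {})


print(lookup("10.0.2.2"))  # forwarded using the parameters from the /24 rule
print(lookup("10.0.9.9"))  # no rule matches, so the default action applies
```

The P4 program only declares the table and the actions; the parameters passed to the action come from whichever rule matched.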

Step 2: Implement MRI

The mri.p4 file contains a skeleton P4 program with key pieces of logic replaced by TODO comments. These should guide your implementation: replace each TODO with logic implementing the missing piece.

MRI will require two custom headers. The first header, mri_t, contains a single field count, which indicates the number of switch IDs that follow. The second header, switch_t, contains the switch ID and queue depth fields for each switch hop the packet goes through.

One of the biggest challenges in implementing MRI is handling the recursive logic for parsing these two headers. We will use a parser_metadata field, remaining, to keep track of how many switch_t headers we need to parse. In the parse_mri state, this field should be set to hdr.mri.count. In the parse_swtrace state, this field should be decremented. The parse_swtrace state will transition to itself until remaining is 0.
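The same counting logic can be tried out in Python before writing it in P4. The sketch below mirrors the two parser states as comments. The field widths are assumptions not stated in this README (16 bits for count, 32 bits each for the switch ID and queue depth), chosen because they are consistent with the option length of 20 for a count of 2 in the sample output in Step 3. The function name parse_mri_bytes is hypothetical.

```python
import struct


def parse_mri_bytes(data: bytes):
    """Parse an MRI header followed by `count` switch records.

    Assumed layout: 16-bit count, then 32-bit swid and 32-bit qdepth
    per record, all in network byte order.
    """
    # State parse_mri: extract the count and initialise the counter.
    (count,) = struct.unpack_from("!H", data, 0)
    remaining = count
    offset = 2
    swtraces = []
    # State parse_swtrace: extract one record, decrement, repeat until 0.
    while remaining != 0:
        swid, qdepth = struct.unpack_from("!II", data, offset)
        swtraces.append((swid, qdepth))
        offset += 8
        remaining -= 1
    return count, swtraces


raw = struct.pack("!HIIII", 2, 2, 0, 1, 17)  # count=2, then (2, 0) and (1, 17)
print(parse_mri_bytes(raw))
```

A P4 parser has no loops, so the while loop here corresponds to a state that transitions back to itself, with remaining deciding when to stop.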

The MRI custom headers will be carried inside an IP Options header. The IP Options header contains a field, option, which indicates the type of the option. We will use a special type 31 to indicate the presence of the MRI headers.
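For reference, the first byte of an IPv4 option packs three fields: a 1-bit copy flag, a 2-bit class, and a 5-bit option number. The Python sketch below splits that byte so you can see where the value 31 sits. The helper name decode_option_type is hypothetical.

```python
def decode_option_type(first_byte: int):
    """Split the IPv4 option-type byte into (copy_flag, opt_class, option)."""
    copy_flag = (first_byte >> 7) & 0x1  # 1 bit
    opt_class = (first_byte >> 5) & 0x3  # 2 bits
    option = first_byte & 0x1F           # 5 bits
    return copy_flag, opt_class, option


IPV4_OPTION_MRI = 31  # the special option number used for MRI

# With copy_flag=0 and class=0, the byte is just the option number.
fields = decode_option_type(IPV4_OPTION_MRI)
print(fields)
print(fields[2] == IPV4_OPTION_MRI)
```

Note that 31 is the largest value a 5-bit option number can hold, and class 0 is the "control" class shown in the sample output in Step 3.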

Beyond the parser logic, you will add a table in egress, swtrace, to store the switch ID and queue depth, and actions that increment the count field and append a switch_t header.

A complete mri.p4 will contain the following components:

- Header type definitions for Ethernet (ethernet_t), IPv4 (ipv4_t), IP Options (ipv4_option_t), MRI (mri_t), and Switch (switch_t).

- Parsers for Ethernet, IPv4, IP Options, MRI, and Switch that will populate ethernet_t, ipv4_t, ipv4_option_t, mri_t, and switch_t.

- An action to drop a packet, using mark_to_drop().

- An action (called ipv4_forward), which will:

  - Set the egress port for the next hop.

  - Update the ethernet destination address with the address of the next hop.

  - Update the ethernet source address with the address of the switch.

  - Decrement the TTL.

- An ingress control that:

  - Defines a table that will read an IPv4 destination address, and invoke either drop or ipv4_forward.

  - Contains an apply block that applies the table.

- At egress, an action (called add_swtrace) that will add the switch ID and queue depth.

- An egress control that applies a table (swtrace) to store the switch ID and queue depth, and calls add_swtrace.

- A deparser that selects the order in which fields are inserted into the outgoing packet.

- A package instantiation supplied with the parser, control, checksum verification and recomputation and deparser.
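The deparser's role, fixing the order of headers on the wire, can be illustrated with a short Python sketch that serializes only the MRI part of the packet. The field widths are assumptions not stated in this README (8-bit option type and length, 16-bit count, 32-bit switch ID and queue depth), and deparse_mri_option is a hypothetical name.

```python
import struct


def deparse_mri_option(swtraces):
    """Emit the IP option header, the MRI count, then each switch record, in order."""
    option_type = 31                    # copy_flag=0, class=0, option=31
    length = 2 + 2 + 8 * len(swtraces)  # option header + count + records
    out = struct.pack("!BB", option_type, length)
    out += struct.pack("!H", len(swtraces))
    for swid, qdepth in swtraces:       # order here is the order on the wire
        out += struct.pack("!II", swid, qdepth)
    return out


wire = deparse_mri_option([(2, 0), (1, 17)])
print(len(wire), wire.hex())
```

With two records the result is 20 bytes long, which agrees with the length field in the sample output in Step 3.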

Step 3: Run your solution

Follow the instructions from Step 1. This time, when your message from h1 is delivered to h2, you should see the sequence of switches through which the packet traveled plus the corresponding queue depths. The expected output will look like the following, which shows the MRI header, with a count of 2, and switch ids (swids) 2 and 1. The queue depth at the common link (from s1 to s2) is high.

got a packet
###[ Ethernet ]###
  dst       = 00:04:00:02:00:02
  src       = f2:ed:e6:df:4e:fa
  type      = 0x800
###[ IP ]###
     version   = 4L
     ihl       = 10L
     tos       = 0x0
     len       = 42
     id        = 1
     flags     =
     frag      = 0L
     ttl       = 62
     proto     = udp
     chksum    = 0x60c0
     src       = 10.0.1.1
     dst       = 10.0.2.2
     \options   \
      |###[ MRI ]###
      |  copy_flag = 0L
      |  optclass  = control
      |  option    = 31L
      |  length    = 20
      |  count     = 2
      |  \swtraces  \
      |   |###[ SwitchTrace ]###
      |   |  swid      = 2
      |   |  qdepth    = 0
      |   |###[ SwitchTrace ]###
      |   |  swid      = 1
      |   |  qdepth    = 17
###[ UDP ]###
        sport     = 1234
        dport     = 4321
        len       = 18
        chksum    = 0x1c7b
###[ Raw ]###
           load      = 'P4 is cool'

Troubleshooting

There are several ways that problems might manifest:

- mri.p4 fails to compile. In this case, make will report the error emitted from the compiler and stop.

- mri.p4 compiles but does not support the control plane rules in the sX-runtime.json files that make tries to install using a Python controller. In this case, make will log the controller output in the logs directory. Use these error messages to fix your mri.p4 implementation.

- mri.p4 compiles, and the control plane rules are installed, but the switch does not process packets in the desired way. The /tmp/p4s.<switch-name>.log files contain trace messages describing how each switch processes each packet. The output is detailed and can help pinpoint logic errors in your implementation. The build/<switch-name>-<interface-name>.pcap files also contain the pcap of packets on each interface. Use tcpdump -r <filename> -xxx to print the hexdump of the packets.

- mri.p4 compiles and all rules are installed. Packets go through and the logs show that the queue length is always 0. In that case, reduce the link bandwidth in topology.json.

Cleaning up Mininet

In the latter two cases above, make may leave a Mininet instance running in the background. Use the following command to clean up these instances:

make stop

Next Steps

Congratulations, your implementation works! Move on to Source Routing.